Caching is a popular memory technique often associated with web browsers, databases, APIs, etc. But what is caching? What is cached data? What is cache memory? You must have come across these terms while clearing browsing data (like cookies).

In this blog, you will get all these questions answered. This blog will also introduce you to the various types of caches developed along with a mention of the popular in-memory data structure store, Redis cache.

All of us have come across the term ‘cache’ (pronounced “cash”) at some point while using a web browser or a mobile application. Some features like login access, accessing data in the app, etc. sometimes don’t work in the expected manner.

A quick web search for a fix almost always suggests clearing your cache as the first solution.

So what is this cache and how exactly does the app get fixed on clearing the cache? This blog will answer all these questions related to ‘Cache’.

What is cache?

Cache is basically a type of hardware/software memory component.

The purpose of this storage layer is to store a subset of the primary data (the most pertinent data) so that future requests for that data can be served faster, reducing the overall loading time of the application. The cached data is either the result of an earlier computation or a copy of previously retrieved data.

Oof. That was a bit of a nerdy way of explaining Cache. Let me illustrate an example to give you the motivation and idea that Cache is based on.

Consider your study table (at your house, college wherever). Now while you are studying you need to refer to some books. So you go to the library to refer to them and come back home. But later you realize that you need to refer to some of those books quite frequently. So you go to the library and issue some of these books and keep them on your study table.

The books on your study table represent the cache memory, while the book racks at the library represent the main storage memory. The library's racks give you a far wider selection of books, but getting a book from them is a slow process.

On the other hand, using the essential books at your study table is much faster but has a limited scope. Now, as time passes the stack of books at your table keeps increasing owing to more books that you need. This stack may break your study cycle by not letting you concentrate on using the essential book that you used before. So what do you do? You clear some of the redundant books and keep only the needed ones; thus clearing your cache memory.
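To make the study-table analogy concrete, here is a minimal sketch of a cache with limited space that throws out the book you have not touched for the longest time (a "least recently used" policy). The names `StudyTable` and `fetch_from_library` are made up for this illustration, and LRU is only one of several possible eviction policies.

```python
from collections import OrderedDict


class StudyTable:
    """A tiny bounded cache: holds at most `capacity` books and
    evicts the least recently used one when it overflows."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.books = OrderedDict()  # title -> contents, least recently used first

    def get(self, title, fetch):
        if title in self.books:
            self.books.move_to_end(title)  # cache hit: mark as most recently used
            return self.books[title]
        contents = fetch(title)            # cache miss: take the slow path
        self.books[title] = contents
        if len(self.books) > self.capacity:
            self.books.popitem(last=False)  # evict the least recently used book
        return contents


library_trips = []  # records every slow trip to the "library"


def fetch_from_library(title):
    library_trips.append(title)
    return f"contents of {title}"


table = StudyTable(capacity=2)
table.get("algebra", fetch_from_library)    # miss
table.get("physics", fetch_from_library)    # miss
table.get("algebra", fetch_from_library)    # hit, no library trip
table.get("chemistry", fetch_from_library)  # miss, evicts "physics"
```

Four lookups cost only three library trips here, and the table never holds more than two books.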

What is general caching? What is web caching and what does clearing cache do?

Caching can be categorized into two types - Browser (client) caching and Server caching. As the name suggests the caching occurs at the respective locations.

Browser caching

When a new webpage is displayed, a lot of files such as HTML, stylesheets, JavaScript files, images need to be downloaded. Browser cache stores some of these files so that when the website is loaded again, downloading of these files can be bypassed.

Try it Yourself

You can observe how browser caching helps by opening a website you have never visited before (for instance, https://www.mapcrunch.com/).

Launch the site for the first time and allow it to load completely. Close the tab and relaunch that website. See any difference? The first time you visit a site, the browser caches some of its resources. The next time you visit that site, you should observe a reduction in loading time. The difference may not always be noticeable to us, but the browser has far less to download.

Server caching

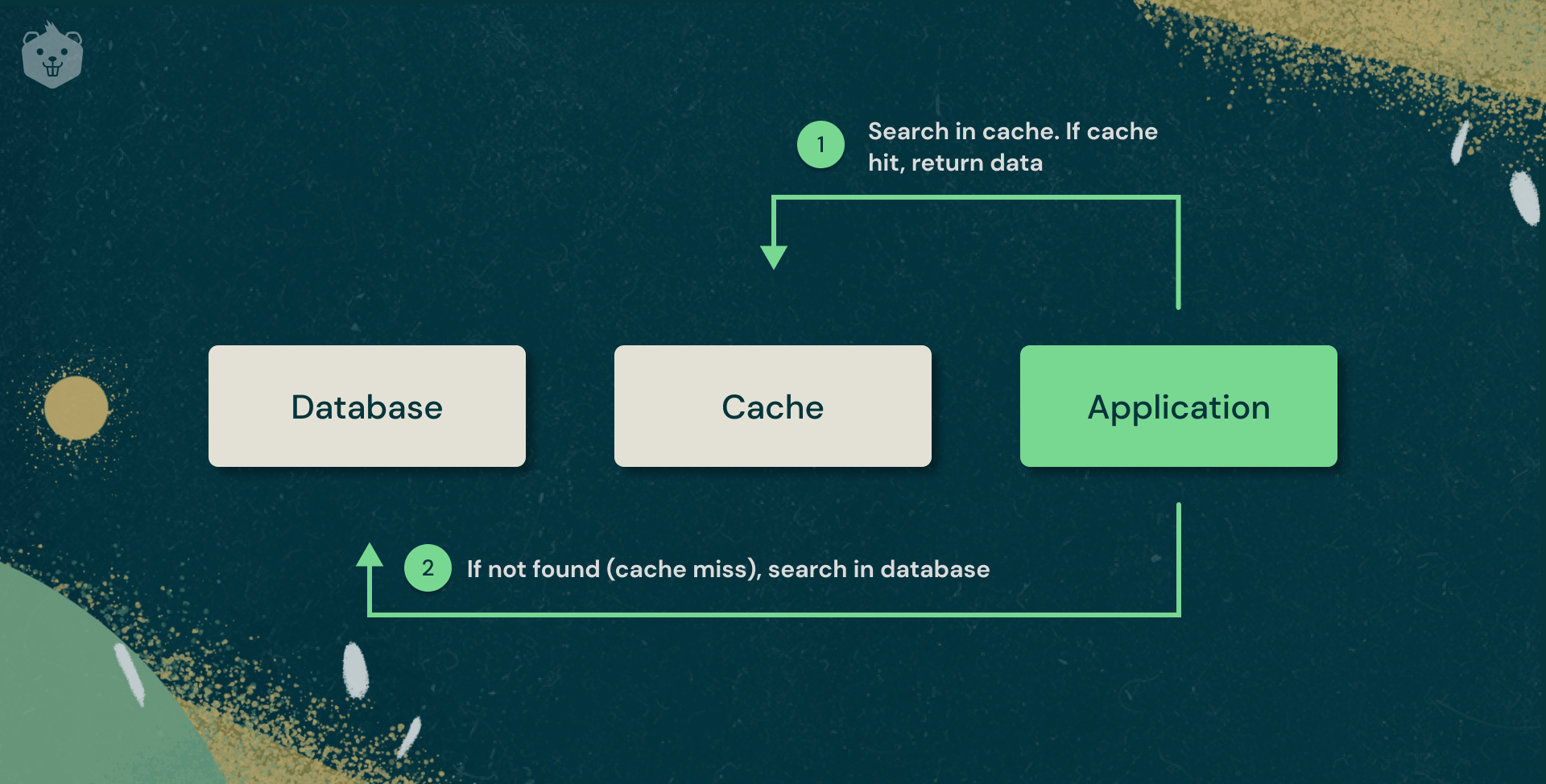

Most modern-day web applications are dynamic, and most of them have a database connection (server) to fetch data. Server caching stores some of the already processed requests/queries (made to the database by the application). This helps in faster data retrieval, thus cutting down the loading time of the application's response.

Some server caching methods include ‘full-page cache’ and ‘object cache’.

Object cache is exactly what we have been discussing in this section. This cache stores the query made initially to the database so that any similar future queries can directly be fetched from the cache rather than the database storage.
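A rough sketch of this idea, using Python's built-in `sqlite3` module as a stand-in for the real database. The `products` table, the `cached_query` helper, and the `stats` counter are all invented for this example; a production object cache would also need to invalidate entries when the underlying data changes.

```python
import sqlite3

# An in-memory SQLite database plays the role of the "slow" backend.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO products (id, name) VALUES (?, ?)",
    [(1, "kettle"), (2, "lamp")],
)

query_cache = {}            # (sql, params) -> list of rows
stats = {"db_queries": 0}   # how often the database was actually hit


def cached_query(sql, params=()):
    """Return rows for the query, touching the database only on a cache miss."""
    key = (sql, params)
    if key in query_cache:
        return query_cache[key]
    stats["db_queries"] += 1
    rows = conn.execute(sql, params).fetchall()
    query_cache[key] = rows
    return rows


first = cached_query("SELECT name FROM products WHERE id = ?", (1,))
second = cached_query("SELECT name FROM products WHERE id = ?", (1,))
```

The second call returns the same rows without running the query again, so `stats["db_queries"]` stays at 1.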

Full-page cache stores the complete rendered output of a page so that it can be served again without being regenerated. This avoids repeating complex operations like the generation of a (new) navigation menu. More on this later on.

Where is cache stored?

Now you might be wondering where this cache gets stored. For most of the caches discussed here, the answer is pretty straightforward: the RAM (Random Access Memory).

A copy of the web's contents (browser cache) gets stored locally on the machine, partly in memory and partly in a temporary directory on disk. The in-memory part is lost when the browser closes or the machine shuts down, while the on-disk part normally survives until it expires or is cleared.

Advanced forms of caching include Redis and Memcached, which also utilize RAM but operate in a more optimized manner.

Key/value stores such as Memcached and Redis can scale to terabytes of data when they are run as a cluster across many machines.

Redis is a great choice for storing data when disk-based durability is not a priority.

How long are these ‘files’ stored?

These files are stored by browsers until either their time to live (TTL) expires or the space set aside for the cache on the hard drive is full. Clearing the cache is advised when some website/application doesn't operate normally, typically because a stored copy is outdated or damaged.

TTL (Time To Live) works like an expiry time attached to the data: it defines how long a cached copy may be used before it has to be fetched again. (In IP networking the same term refers to a hop limit, which is a different idea.)
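Here is a small sketch of how a TTL can be attached to cached entries. The `TTLCache` class is hypothetical, and the clock is passed in as a parameter only so that the example can "fast-forward" time instead of actually waiting.

```python
import time


class TTLCache:
    """Stores each value with an expiry time; expired entries count as misses."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries = {}  # key -> (value, expires_at)

    def set(self, key, value):
        self.entries[key] = (value, self.clock() + self.ttl)

    def get(self, key, default=None):
        item = self.entries.get(key)
        if item is None:
            return default
        value, expires_at = item
        if self.clock() >= expires_at:
            del self.entries[key]  # the entry has outlived its TTL
            return default
        return value


now = [0.0]  # a fake clock we can move forward by hand
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])
cache.set("logo.png", b"image bytes")
fresh = cache.get("logo.png")  # still within the 60-second TTL
now[0] = 61.0                  # pretend 61 seconds have passed
stale = cache.get("logo.png")  # expired, so treated as a miss
```

After the TTL has passed, the lookup behaves as if the file had never been cached, which is exactly when a browser would download it again.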

Clearing cache will reset the app to such a stage wherein it will be like the user has visited the site/app for the first time. Sometimes cache is incorrectly loaded which affects the loading of the website. Clearing the cache allows the cache to be correctly loaded again. An obvious consequent effect of clearing cache will be the slightly increased loading times.

Try it yourself

Visit a retail shopping site like Amazon, Flipkart, where the main web page’s content is rendered by converting the HTML files along with the stylesheets, JavaScript files, and this is stored in the cache. Other items like all product images and personalized credentials like login details etc. are also stored in the cache of such websites.

Clear your browser caches and head back to the retail site. For starters the site will load slower than before and also the retail site may ask you to log back in again and you may also have to set up your inside-site settings once again. Do give it a try as this won’t harm your routine browser usage in any grievous way.

Gauging Cache’s speed

In the evolution of computer technology, there has always been a trade-off between size and speed. Larger devices offer greater capacity but often at the cost of lower speed, like magnetic hard drives or DRAM (Dynamic RAM, i.e. the traditional RAM, with storage sizes in the range of megabytes to gigabytes).

Costlier, premium devices offer less capacity but much greater speed, like SRAM (Static RAM, i.e. the memory used for CPU caches, with storage sizes in the range of kilobytes to megabytes) compared to DRAM, or an SSD compared to a magnetic hard drive. Two macro terms associated with these technologies are latency and throughput (bandwidth).

Latency

Latency is the time taken for an application to respond to some action the user has taken.

Technically, network latency is the delay introduced by the network (/internet). Latency can never be completely eliminated because of physical distance and the quality of network equipment, although minimizing it is entirely possible.

Bad latency affects the application/website in terms of poor performance which will eventually reduce the number of people using the application.

Now, for larger resources, the latency for accessing them is significantly high. So reading is done in large chunks in the hope that some part of each chunk will be used sooner or later.

Pre-fetching a set of predicted data is useful in reducing latency.

Cache acts as a buffer in these devices, reducing latency by avoiding repeated long-distance fetches of resources.
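The chunked-reading idea can be sketched as follows. `BlockReader` is an invented name, and a plain `bytes` object stands in for the slow device; the point is only that one slow read brings in a whole block, so neighbouring reads become cache hits.

```python
class BlockReader:
    """Reads single bytes, but fetches a whole block from the slow
    source on a miss so that nearby reads are served from the cache."""

    def __init__(self, data, block_size):
        self.data = data            # stands in for a slow device
        self.block_size = block_size
        self.blocks = {}            # block index -> cached bytes
        self.slow_reads = 0         # number of trips to the slow device

    def read_byte(self, offset):
        index = offset // self.block_size
        if index not in self.blocks:
            start = index * self.block_size
            self.blocks[index] = self.data[start:start + self.block_size]
            self.slow_reads += 1
        return self.blocks[index][offset % self.block_size]


reader = BlockReader(data=bytes(range(256)), block_size=64)
values = [reader.read_byte(i) for i in range(100)]
```

Reading the first 100 bytes one at a time needs only 2 slow reads in this sketch (blocks 0 and 1) instead of 100.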

Gamers might be familiar with the term latency as well as the term ‘ping’. The two are closely related: ping is a measurement of network latency. When the user does some action (like clicking a link), a request travels over the network to the application, and the application then sends its response back.

The time taken for this whole round trip is what ping reports. The value is usually given in milliseconds (like 31 ms).

Throughput

Throughput is the rate at which something is processed, like a rate of production.

This is a common concept in communication networks, and it is also used as a measure of the performance of hard drives, RAM, and internet/network connections.

You must have observed the transfer rate of a hard drive listed as 50 Mbps or the download speed of an internet connection as 25 Mbps or so. These figures describe throughput (strictly speaking, bandwidth is the maximum rate a connection can carry, while throughput is the rate actually achieved).

Cache allows higher throughput by combining many small transfers into fewer, more efficient large requests.
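One way to picture this is a write buffer that collects small writes and hands them to the slow resource in batches. `BatchingWriter` and its `sink` parameter are hypothetical names for this sketch; here the "slow resource" is simply a list that records each batch it receives.

```python
class BatchingWriter:
    """Buffers small writes and forwards them to `sink` in larger batches."""

    def __init__(self, sink, batch_size):
        self.sink = sink              # callable that receives a list of items
        self.batch_size = batch_size
        self.pending = []

    def write(self, item):
        self.pending.append(item)
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self):
        if self.pending:
            self.sink(self.pending)
            self.pending = []         # start a fresh buffer for the next batch


batches = []  # each element is one large request made to the slow resource
writer = BatchingWriter(sink=batches.append, batch_size=4)
for n in range(10):
    writer.write(n)
writer.flush()  # push out whatever is left in the buffer
```

Ten small writes turn into three larger requests, which is usually cheaper when every request carries a fixed overhead.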

Benefits of caching

- Better Application Performances

- Economical Rates for Database

- Optimized database's load distribution

- Predictable Utility Results

Let's look at each one by one.

Better Application Performances

Reading from memory is many times faster than reading from disk (whether a magnetic HDD or an SSD), so using an in-memory cache is extremely fast. This tremendous data fetching speed improves the overall performance of the application.
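Python's standard library ships an in-memory cache decorator, `functools.lru_cache`, which makes for a quick illustration. The `load_profile` function below is invented for the example and merely pretends to be a slow disk or database read.

```python
from functools import lru_cache

calls = []  # records every time the "slow" function body really runs


@lru_cache(maxsize=128)
def load_profile(user_id):
    calls.append(user_id)  # stands in for a slow disk or database read
    return {"id": user_id, "name": f"user-{user_id}"}


for user_id in [1, 2, 1, 1, 2]:
    load_profile(user_id)

info = load_profile.cache_info()  # hit/miss statistics kept by lru_cache
```

Out of five requests, only the two distinct users trigger the slow path; the other three are answered straight from memory.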

Economical Rates for Database

Database instances can at times be costly, especially if the database charges per throughput. A single cache instance can provide a huge number of IOPS (input/output operations per second) and can thereby replace a number of database instances, which cuts cost and helps in minimizing latency as well.

In-memory systems compare well against disk-based databases because the former offer much higher request rates, i.e. more input/output operations per second (IOPS). For example, a single instance used as a distributed side-cache can serve hundreds of thousands of requests per second or more, depending on hardware and workload.

Optimized database's load distribution

Caching makes use of the in-memory storage layer which redirects the load (reading/accessing the database) from the main backend database. This helps the database to perform better in times of high load and also caching keeps the database safe from crashing during network request spikes.

Predictable Utility Results

This is a practical challenge faced by popular apps like social media apps or retail (eCommerce) websites. During events such as the Champions League or Apple announcing its new iPhone, the databases of social media platforms like Twitter and Facebook take a heavy load of read requests. The same goes for eCommerce sites like Amazon and Flipkart during the Christmas sale.

This results in higher latencies and the performance of the app thus becomes unpredictable. By employing high throughput in-memory cache this bottleneck situation can be alleviated.

Let’s first understand how cache memory works in a computer at the hardware level in the next section. Then we’ll get back to the general caching concepts.

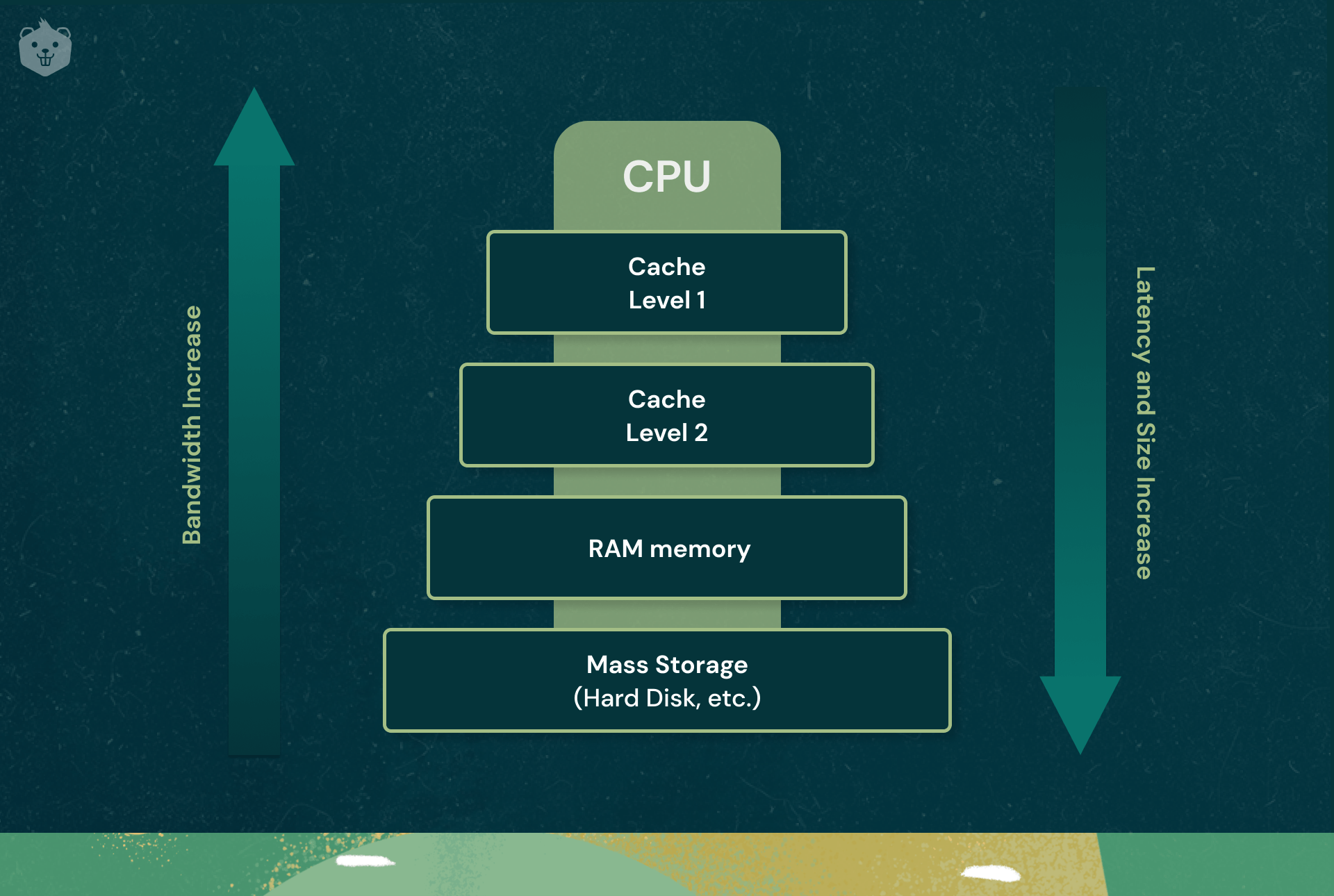

How CPU architecture (memory) is utilized for caching

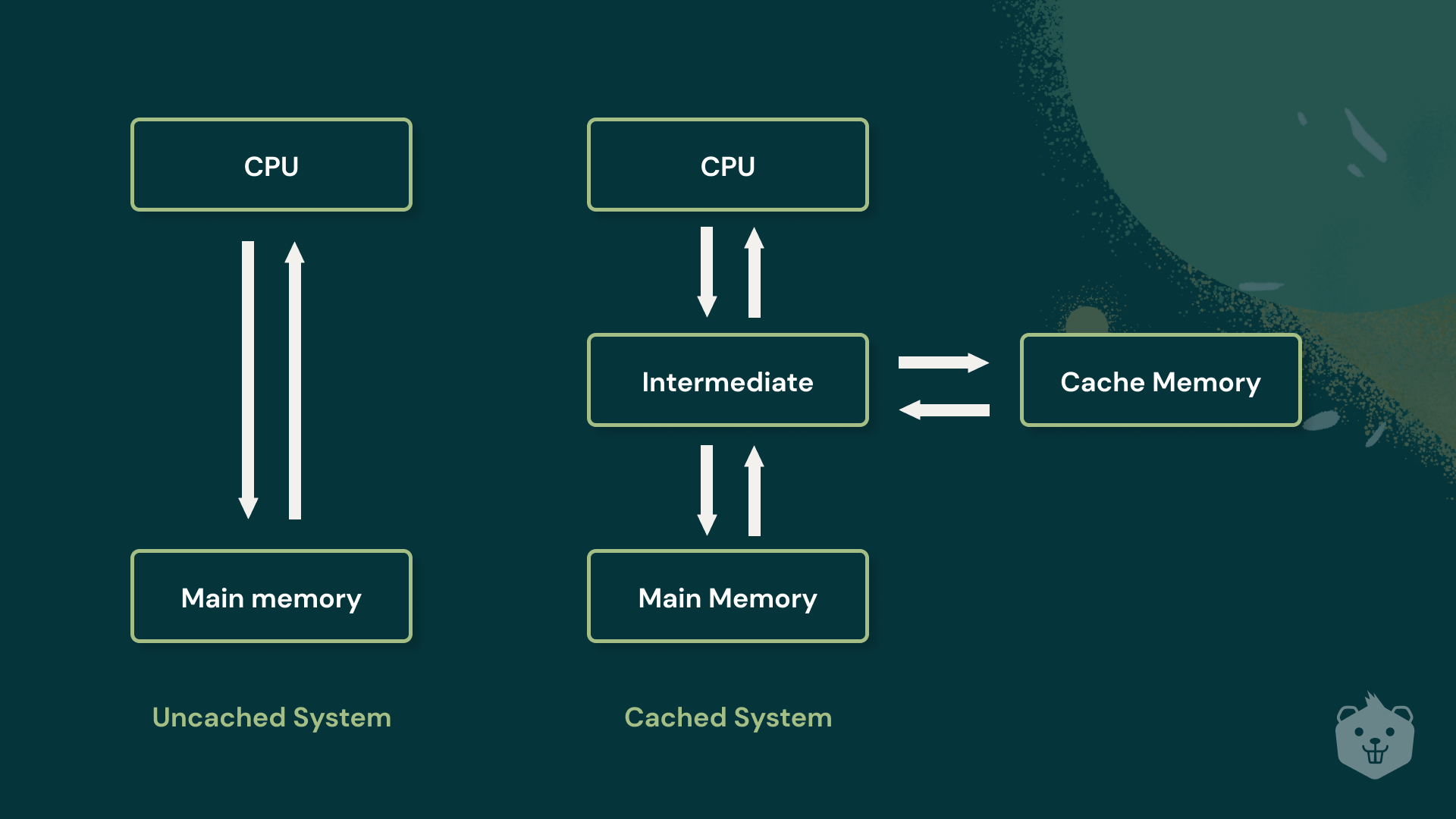

Very early PCs did not have the concept of cache memory in them. So all data transfer would occur directly between the CPU and the main storage device, which, as you can intuitively conclude, is a time-consuming process. A basic flow diagram explaining an "un-cached" and cached system is as follows:

Cache memory is an extremely high-speed data storage layer that basically acts as a buffer between RAM and CPU.

Cache’s primary purpose is to increase data retrieval performance. This is achieved by keeping frequently needed data in the cache, which cuts down the recurrent need to access the slower storage layer. And since cached data is transient in nature, a cache is ideally implemented in fast-access memory hardware (like RAM).

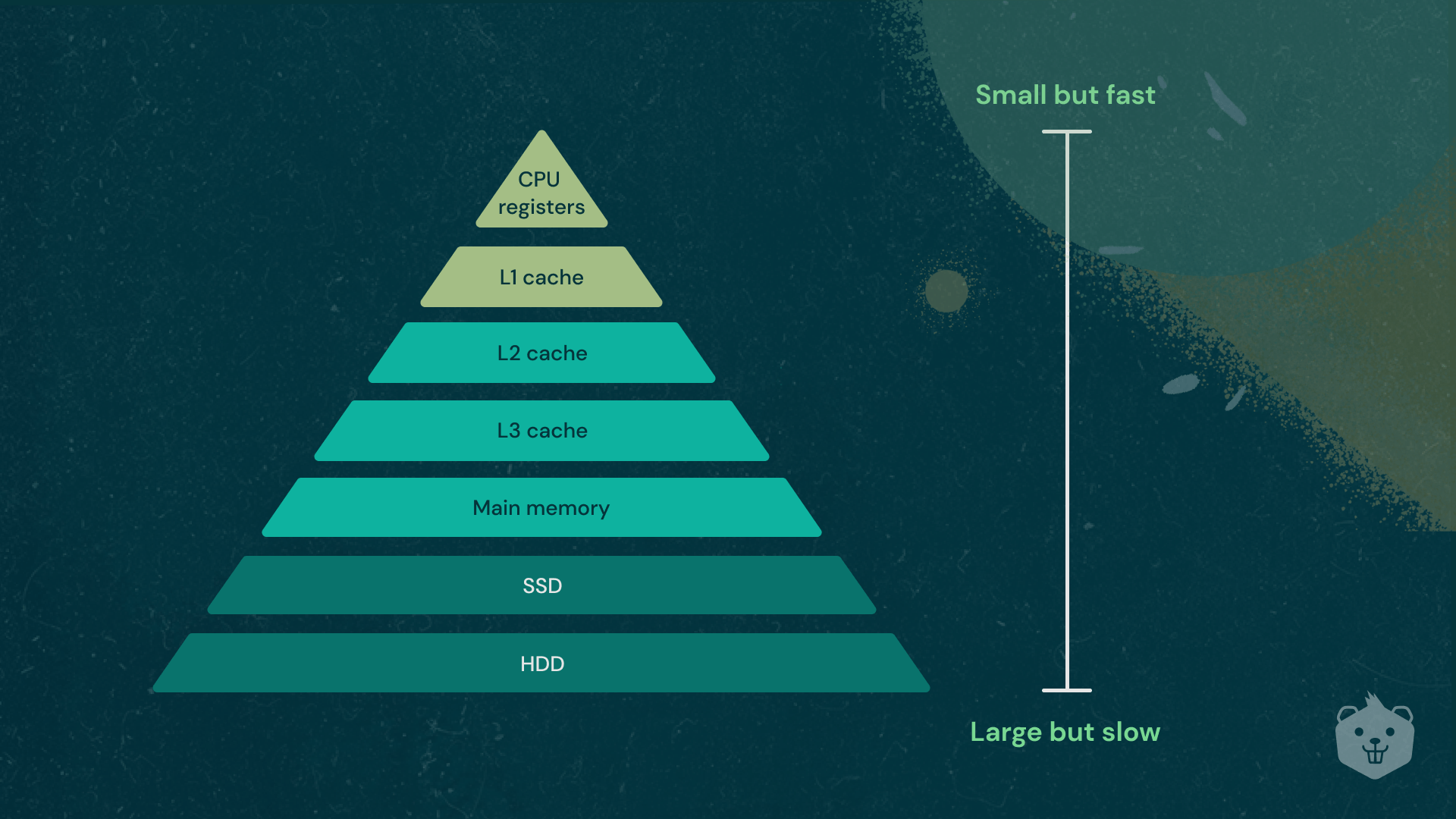

The hierarchy of the memory layer shown above goes like this:

Tier 1: Registers are quick-access storage locations inside the CPU. They hold only a small amount of data but are the fastest storage available; some registers have specific hardware functions and may be read-only or write-only.

Tier 2: Cache memory layer has 3 sublayers, namely L1, L2, and L3.

L1 cache, or primary cache is small and embedded in the processor chip. This enables L1 to be an extremely fast CPU cache.

L2 and L3 are extra cache layers built between CPU and RAM. These are bigger than L1 and thus take a bit longer to access than L1. In general, the more L2 and L3 cache is available, the more often the CPU can avoid a slower trip to RAM.

Also, L3 is a specialized memory to improve the performance of L1 and L2.

All 3 layers collectively contribute to the cache memory that stores the subset of frequently requested data (as discussed before).

Tier 3: This is the main memory (RAM) on which the computer works while it is switched on. Once power is shut off the data in this memory is erased. It is larger than the earlier mentioned tiers of memory but small compared to the next tier of memory.

Tier 4: A computer’s SSD (if available) and HDD are the secondary memory or the main storage center of the computer. This can also be available in the form of external memory (portable HDD/SSD). These are large in size but at the same time slower in terms of data fetching as compared to earlier tiers of memory.
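The tiers above can be imitated with a toy lookup that checks the fastest tier first and falls back to slower ones. This is a heavily simplified sketch: the `read` function and the tier names are assumptions for illustration, and real hardware also has fixed capacities, cache lines, and eviction, none of which is modelled here.

```python
def read(address, tiers, backing_store):
    """Look up `address` in each tier from fastest to slowest.

    `tiers` is a list of (name, dict) pairs; `backing_store` is the
    slowest level (think SSD/HDD) and is assumed to hold everything.
    Returns the value and the name of the level where it was found.
    """
    for depth, (name, store) in enumerate(tiers):
        if address in store:
            value, found_in = store[address], name
            break
    else:
        value, found_in = backing_store[address], "storage"
        depth = len(tiers)
    # Copy the value into every faster tier so the next read is quicker.
    for _, store in tiers[:depth]:
        store[address] = value
    return value, found_in


tiers = [("L1", {}), ("L2", {}), ("RAM", {})]
disk = {0x10: "hello"}
first = read(0x10, tiers, disk)   # nothing cached yet: comes from storage
second = read(0x10, tiers, disk)  # now found in the fastest tier
```

The first read goes all the way down to storage, while the repeat read is answered by the "L1" tier.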

Is Cached Data Important?

The simple answer is YES. Cached data promotes faster and efficient site loading times. People will prefer websites that load fast and give accurate results. Owing to good user experience and website attraction, caching plays an important role in keeping the website fast at all times.

But there is also a downside to cached data.

Debugging is a challenge due to the transient nature of caches. It clouds the matter in an extra layer of mystery. Cached data may expose confidential and sensitive information like session tokens, authentication data, browsing history by making them vulnerable if the browser has been left open for too long or some untrusted party has access to the browser. But this is a risk that is sensible to take!

The clearing procedure of cache varies from device to device, and it is no rocket science.

Try it yourself

A typical approach is to navigate to the “Clear Browsing History” option where you will be given a basic option to delete the cookies and site data, cache files. Choosing the cache clearing option will clear all sites' cache data and your visit to any site will be treated as the first visit by that site. For Google Chrome browser you need to head over to chrome://settings/clearBrowserData to clear your cache files (as shown in the image below)

Similarly, on mobile devices, the modus operandi is to navigate to the ‘Apps’ section in your settings (in iOS it is located at ‘Storage & iCloud Usage’) where you can access a particular app's storage settings. There you will be given basic options for deleting data (all of the app’s data) and deleting cache (only the app’s cache).

Extend this mini-experience to other web browsers like Mozilla Firefox, Internet Explorer, Opera, etc., and also to other mobile phone OSs like Android, iOS, or maybe even Windows (😬).

But should you clear this cache? If yes, then how often? Frequently clearing cache is not advisable and at the same time unnecessary.

Some situations where you should clear cache are as follows:

- If your system storage is running out of space it may happen so that your phone keeps lagging and you just can’t navigate around. Clearing cache here will give you some breathing room to at least temporarily use your phone like before.

- Sometimes some websites or apps may not function normally, most likely due to the incorrect storing of the cache files. Clearing cache here gives the website a fresh chance to download the cache files correctly this time. But then again, when you load the website just after clearing the cache it will take some time, as the website treats this like your first visit. But given good hardware and a good internet connection, you may not even notice the difference!

- One thing to take care of is that clearing cache may at times also clear your auto-fill credentials, login details, etc. So make sure you have backed up such sensitive data before clearing your cache (and clear only the cache, not the app data).

Cache vs. cookies

You may have considered the two similar and yes they are similar in the sense that both store information on a particular machine. But, their ways of storing and purposes are quite different.

Browser cache stores pertinent data files to speed up your website, while cookies store specific information that allows the user’s behavior (on the internet) to be identified and tracked in a safe personalized manner.

Now, cookies or HTTP cookies are stored pretty much like cache memory while the user browses the site. Cookies are a reliable mechanism to track and record a user’s browsing activity to identify the user’s set of preferences and choices. These cookies aren’t dangerous per se, but many malicious websites may misuse your cookies on that website.

Cookies are good as they help you see personalized content on the internet and keep you away from things you don’t like on the internet. Deleting them is, just as with the cache, a choice for you to make.

Where and how is caching used

Let’s understand some of the modern practical use cases of caching:

General Cache

There are quite a few use-cases that do not need disk-based data support. Using an in-memory storage layer (as a standalone database) is much faster and thus can be utilized to produce highly performant applications. Cache provides both speed and high throughput at a cost-effective price point. Product groupings, category listings, profile information, and other referenceable data are excellent use cases for a generic cache.

Application Programming Interfaces (APIs)

Most of the web apps today are built on APIs. When designing an API, it's vital to consider the API's expected load, authorization, the impact of version changes on API users, and, most crucially, the API's ease of use, among other aspects. Responding with a cached result of the API can sometimes be the most effective and cost-effective response.

Session Management

User data communicated between your site's users and your web apps, such as login information, shopping cart lists, previously seen items, and so on, are stored in HTTP sessions. Managing your HTTP sessions properly, by storing your users' preferences and delivering rich user context, is critical to offering exceptional user experiences on your website. Using a centralized session management data store (a type of cache) is the ideal solution.
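As a minimal sketch of such a session store, the hypothetical `SessionStore` class below keeps sessions in a plain in-process dictionary. A real deployment would more likely keep them in a shared store such as Redis so that every web server sees the same sessions, and would also expire old ones.

```python
import secrets


class SessionStore:
    """Keeps per-user session data in memory, keyed by a random session id."""

    def __init__(self):
        self.sessions = {}  # session id -> session data

    def create(self, user):
        session_id = secrets.token_urlsafe(16)  # hard-to-guess identifier
        self.sessions[session_id] = {"user": user, "cart": []}
        return session_id

    def get(self, session_id):
        return self.sessions.get(session_id)  # None if unknown or destroyed

    def destroy(self, session_id):
        self.sessions.pop(session_id, None)


store = SessionStore()
sid = store.create("alice")
store.get(sid)["cart"].append("kettle")  # the cart survives between requests
```

The browser would only need to hold on to `sid` (typically in a cookie), while the actual session data lives on the server side.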

Content Delivery Network (CDN)

A content delivery network (CDN) is a geographically dispersed group of servers that collaborate to deliver Internet content quickly. A CDN enables the rapid distribution of assets such as HTML pages, JavaScript files, stylesheets, pictures, and videos that are required for loading Internet content.

Many websites find that standard hosting services fail to meet their performance requirements, which is why they turn to CDNs.

CDNs are a popular solution for relieving some of the primary pain points associated with traditional web hosting by leveraging caching to minimize hosting capacity, help prevent service outages, and improve security.

CDN services handle the traffic of many large sites like Amazon, Facebook, and Netflix.

Domain Name System (DNS) Caching

To speed up website loading, web browsers cache HTML files, JavaScript, and pictures, while DNS servers cache DNS records for faster lookups and CDN servers cache material to reduce latency.

DNS caching is carried out on DNS servers. The servers cache recent DNS lookups so that they don't have to query nameservers and can respond quickly with a domain's IP address.

Search engines cache frequently appearing web pages in search results so that they can quickly respond to search queries even if the website they are attempting to access is down or unavailable.

Database caching

A use-case of database caching is query acceleration, which stores the results of a difficult database query in the cache. Complex queries that perform operations like grouping and ordering can take a long time to finish. If queries are executed repeatedly, as in the case of a business intelligence (BI) dashboard with numerous users, keeping the answers in a cache would improve the dashboard's responsiveness.

Applications can reduce delays caused by frequent database accesses by substituting a portion of database reads with cache reads. This use case is typically found in environments where a high volume of data accesses is seen, like a high-traffic website that features dynamic content from a database.

Final Thoughts

The Art of Caching is to deliver improved efficiency, reliability, and performance of a company/website's cache infrastructure.

Although this blog has not covered any of the core technicalities of caching, I hope the purpose and importance of cache have been elucidated.

Let us know in the comments what more about caching you would like us to shed light on.

Till then explore various cache technologies and equip yourself for our next edition.